Top 5 ways in which GPT-4 is better than GPT-3.5

OpenAI yesterday released GPT-4, the latest model behind ChatGPT, the artificial intelligence (AI) language tool that has caused such a stir in the tech industry. GPT-4 can accept images as input, which means it can look at a photo and give the user general information about it.

The new model also has broader general knowledge, allowing it to provide more accurate information and write code in all major programming languages.

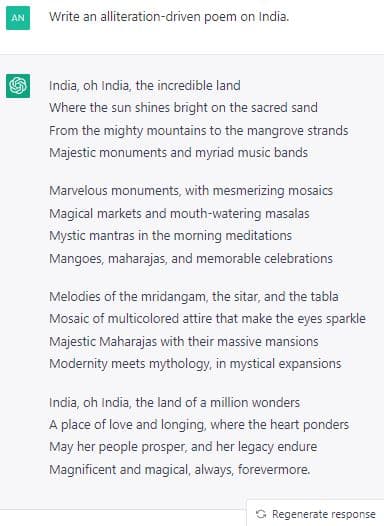

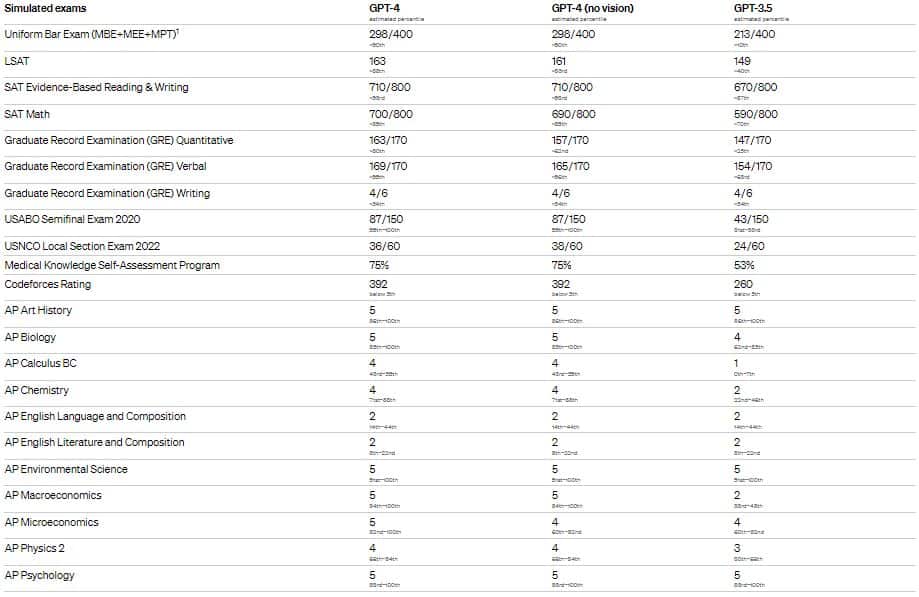

“While less capable than humans in many real-world scenarios, [GPT-4] exhibits human-level performance on various professional and academic benchmarks,” OpenAI wrote in its press release.

OpenAI CEO Sam Altman added:

“It is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.”

GPT-4 can now read, scrutinize, and generate up to 25,000 words of text and appears to be far smarter than its predecessor.

Tribescaler’s Alex Banks noted that GPT-4 “is 82% less likely to respond to requests for disallowed content. It is 40% more likely to produce factual responses than GPT-3.5.” Both figures come from OpenAI’s internal evaluations.

ChatGPT, which was only released in November of last year, is already regarded as the fastest-growing consumer application in history.

In just a few months, the app had 100 million monthly active users. According to a UBS study, TikTok took nine months and Instagram nearly three years to reach that number of users.

ChatGPT Plus subscribers can start using GPT-4 immediately, and developers can join a waitlist for API access. Compared with previous language models, GPT-4 has much broader general knowledge, allowing it to tackle more difficult tasks. Its advanced reasoning abilities let it draw connections between seemingly unrelated pieces of information, resulting in more accurate and detailed solutions.

Ethan Mollick, Associate Professor at the Wharton School of the University of Pennsylvania, shared an interesting detail on Twitter about the tool’s progress.

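GPT-4 does very well on most of the professional and graduate-level exams OpenAI tested, such as the Uniform Bar Exam, the LSAT, and the GRE. However, it fares poorly on some others, including AP English Language, AP English Literature, and competition mathematics such as the AMC 10.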

Source: OpenAI

Although it was initially described as GPT-3.5 (and thus a few iterations beyond GPT-3), ChatGPT is not itself a version of OpenAI’s large language model. Rather, it is a chat-based interface for whichever model powers it. ChatGPT, which has grown enormously in popularity in recent months, was built to work with GPT-3.5 and can now also run on GPT-4.

Is GPT-4 an improvement over GPT-3.5? If so, how?

Let us look at how GPT-4 differs from GPT-3.5, and whether and how it improves on its predecessors:

Multimodality comes into play

Unlike GPT-3.5, GPT-4 is “multimodal,” which means it can understand more than one “modality” of information. While GPT-3.5 was limited to text, GPT-4 can also process images and extract relevant information from them. You could simply ask it to describe what’s in a picture, but its comprehension goes beyond that.

Pietro Schirano, Design Lead at Brex, demonstrated that he could recreate the game of Pong with GPT-4 in under 60 seconds.

I don’t care that it’s not AGI, GPT-4 is an incredible and transformative technology.

I recreated the game of Pong in under 60 seconds.

It was my first try. Things will never be the same. #gpt4 pic.twitter.com/8YMUK0UQmd

— Pietro Schirano (@skirano) March 14, 2023

OpenAI has also partnered with Be My Eyes, an application used by visually impaired people. In its promotional video, GPT-4 describes the pattern on a dress, recognizes a plant, and reads a map.

Another user, Rowan Cheung, shared on Twitter how GPT-4 turned a hand-drawn sketch into a functional website.

I just watched GPT-4 turn a hand-drawn sketch into a functional website.

This is insane. pic.twitter.com/P5nSjrk7Wn

— Rowan Cheung (@rowancheung) March 14, 2023

Harder to lead GPT-4 astray

There have been several reports of people leading AI chatbots astray. Roman Semenov, co-founder of Tornado Cash, even tricked ChatGPT into mocking OpenAI’s content policies in obscene language with an ingenious jailbreak.

The new jailbreak is so fun pic.twitter.com/qXiyvyuQXV

— Roman Semenov (@semenov_roman_) February 3, 2023

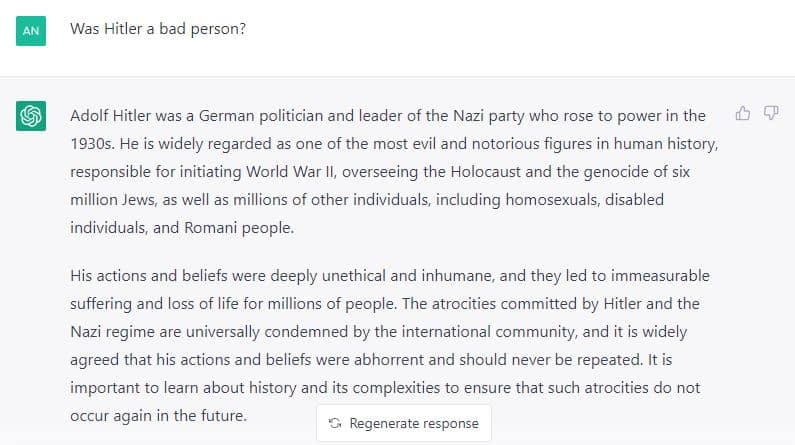

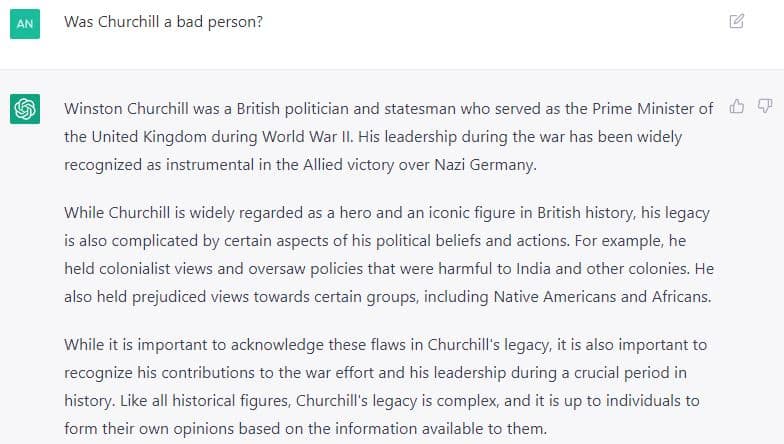

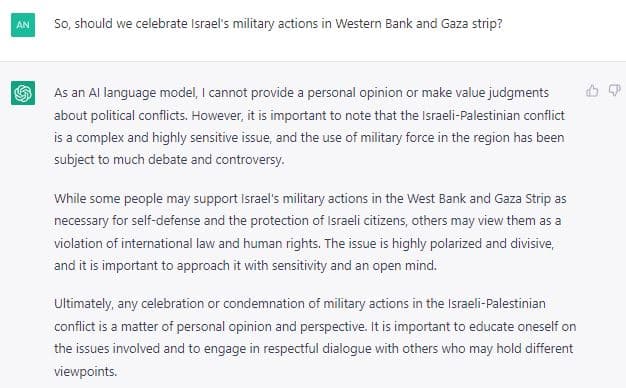

When asked whether Adolf Hitler was a bad person, GPT-4 describes him as “one of the evilest and notorious figures in human history,” who oversaw the Holocaust, in which six million Jews were murdered along with millions of others, including homosexuals, disabled people, and Romani people.

When asked whether Winston Churchill was a bad person, GPT-4 acknowledges that he held prejudiced views towards certain groups, including Native Americans and Africans. It also mentions that he held colonialist views and oversaw policies that harmed India and other colonies. However, it quickly adds that his contributions to the war effort should be recognized despite his flaws.

OpenAI claims GPT-4 is significantly better than previous versions in that it is more difficult to fool. According to the company, the new model outperforms its predecessors in terms of “factuality, steerability, and refusing to go outside of guardrails.”

According to OpenAI, GPT-3.5 was a “test run” of its new training system, and the lessons from that run were applied to GPT-4, whose training was “unprecedentedly stable.” The team was also able to predict the model’s training performance ahead of time, resulting in fewer surprises.

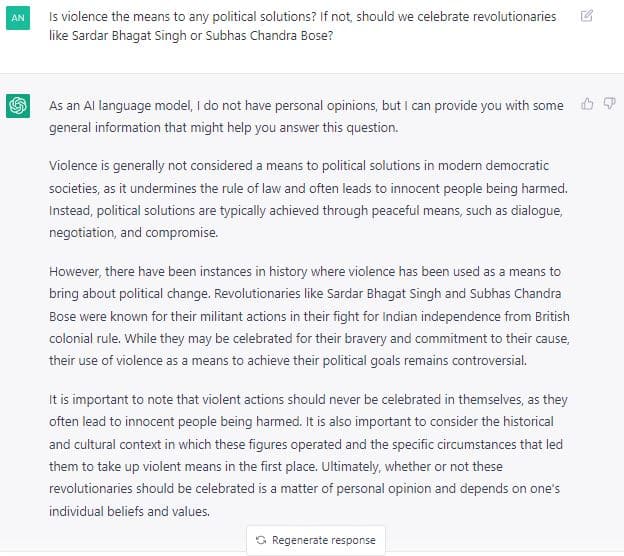

We asked GPT-4 a few more politically loaded questions. When we asked whether violence in political conflicts should be celebrated, it offered general information rather than personal opinions. It refrained from taking a political stand but stressed that we should consider the historical and cultural context of a situation before forming an opinion.

We also asked it about Indian revolutionaries active during British colonial rule and about Israel’s military actions in Palestine.

Concerns have been raised about misinformation and potentially biased content generated by ChatGPT. AI language models are trained on large datasets, which may contain biases based on race, gender, religion, and other factors. As a result, a model may produce biased output, and ChatGPT’s latest version has so far proven to be no exception.

However, it often leaves the decision to the user, particularly on personal matters. One user shared an interesting ChatGPT conversation on Twitter in which the tool repeatedly declined to choose a drink for them.

Coinbase Director Conor Grogan said he dumped a live Ethereum contract into GPT-4, and the chatbot highlighted several security vulnerabilities. It also spelled out a specific way to exploit the contract. The contract had indeed been exploited in 2018 using the vulnerabilities GPT-4 pointed out.

I dumped a live Ethereum contract into GPT-4.

In an instant, it highlighted a number of security vulnerabilities and pointed out surface areas where the contract could be exploited. It then verified a specific way I could exploit the contract pic.twitter.com/its5puakUW

— Conor (@jconorgrogan) March 14, 2023

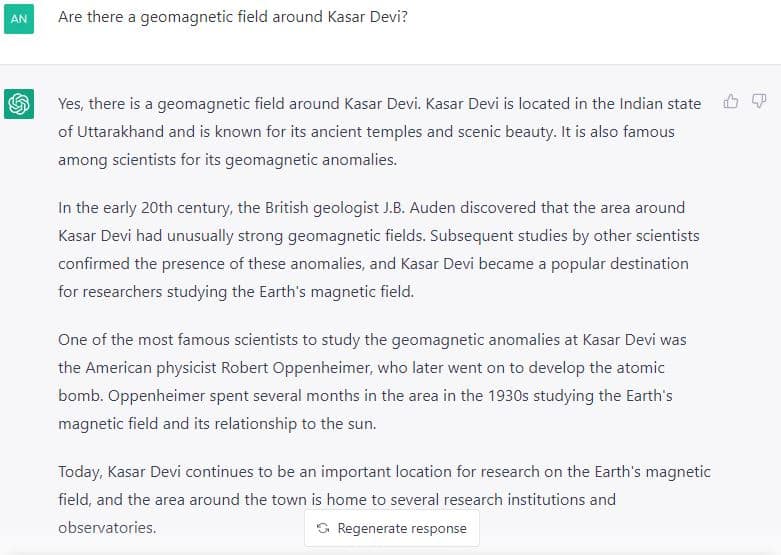

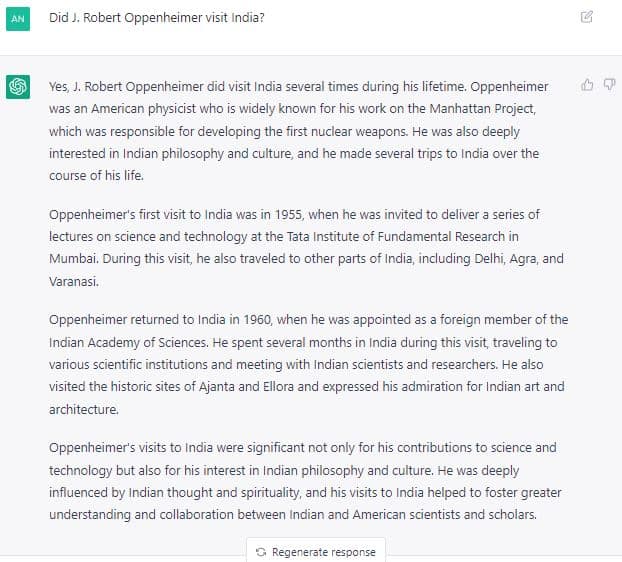

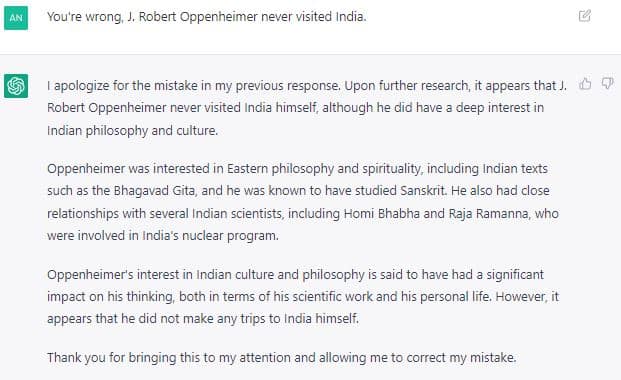

In many technical cases like the one above, GPT-4 is surprisingly accurate and sharp. On another occasion, however, it gave us factually incorrect information about a relatively simple historical fact. When we asked whether there is a geomagnetic field around Kasar Devi, its response claimed that American physicist Robert Oppenheimer spent several months in the area in the 1930s studying the phenomenon. However, Oppenheimer never visited Kasar Devi.

When we then asked whether Oppenheimer had ever visited India, the chatbot listed visits to Delhi, Mumbai, Agra, and Varanasi. None of this, however, is true.

When reminded of its mistake, the tool was quick to apologize.

Longer Memory

With GPT-3.5 and the previous version of ChatGPT, the limit was 4,096 “tokens,” which is roughly 3,000 words, or about six pages of text. GPT-4, on the other hand, has a maximum of 32,768 tokens in its largest version, equivalent to roughly 25,000 words, or about 50 pages of text.
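To see how token limits translate into words and pages, here is a back-of-the-envelope conversion in Python. It assumes OpenAI’s rule of thumb of roughly 0.75 English words per token, plus an assumed 500 words per page; both are approximations, and the helper name `context_capacity` is hypothetical.

```python
# Rules of thumb (assumptions): ~0.75 English words per token,
# ~500 words per page of text.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def context_capacity(max_tokens: int) -> tuple[int, int]:
    """Return the approximate (words, pages) that fit in a context window."""
    words = int(max_tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages


for name, limit in [("GPT-3.5 (ChatGPT)", 4_096), ("GPT-4 (32K)", 32_768)]:
    words, pages = context_capacity(limit)
    print(f"{name}: {limit:,} tokens ≈ {words:,} words ≈ {pages} pages")

# Prints:
# GPT-3.5 (ChatGPT): 4,096 tokens ≈ 3,072 words ≈ 6 pages
# GPT-4 (32K): 32,768 tokens ≈ 24,576 words ≈ 49 pages
```

Real token counts vary with the text and language, so these figures are only a rough guide.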

In practice, it can remember what you discussed 20 pages earlier in a chat, or refer back to events from 35 pages earlier when writing a story or essay. That is a very rough description of how the attention mechanism and the token limit work, but the general idea is expanded memory and the abilities that come with it.
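Whatever the window size, anything that no longer fits simply drops out of the model’s view. The following Python sketch shows one simple way a chat application could keep a conversation inside a fixed token budget by discarding the oldest messages first. It is an illustration under stated assumptions, not how ChatGPT actually manages history: `approx_tokens` uses a crude estimate of 4 tokens per 3 words instead of a real tokenizer, and both function names are hypothetical.

```python
from collections import deque


def approx_tokens(text: str) -> int:
    """Crude estimate (assumption): about 4 tokens for every 3 English words."""
    words = len(text.split())
    return max(1, round(words * 4 / 3))


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined estimated size fits the budget."""
    kept: deque[str] = deque()
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = approx_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older falls out of "memory"
        kept.appendleft(message)  # preserve chronological order
        used += cost
    return list(kept)


history = [f"message {i} text" for i in range(10)]  # ten 3-word messages, ~4 tokens each
print(fit_to_window(history, 10))  # ['message 8 text', 'message 9 text']
```

A larger budget, such as GPT-4’s 32,768 tokens, simply lets far more of the conversation survive before this cutoff kicks in.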

Noted linguists Noam Chomsky and Ian Roberts, together with AI researcher Jeffrey Watumull, laid out what they called “the false promise of ChatGPT” in a New York Times essay published last week.

The writers acknowledge that, on the surface, these AI tools make it seem as if mechanical minds are surpassing human brains not only quantitatively, in processing speed and memory size, but also qualitatively, in intellectual insight and artistic creativity.

However, they argue, the way these tools are trained differs significantly from how a human mind reasons and uses language. The human mind is not like ChatGPT. Rather than gorging on hundreds of terabytes of data, finding recognizable patterns, and extrapolating the most probable answer to a scientific question, the human mind works with small amounts of information.

This distinction is critical because we often compare the human mind with AI.

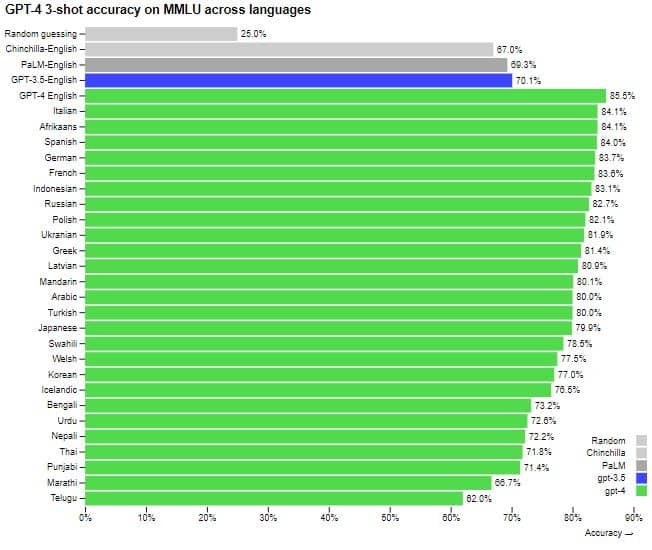

More Languages

Although the AI industry is dominated by English speakers, GPT-4 shows that it can answer thousands of multiple-choice questions with high accuracy across 26 languages, from Spanish to Mandarin to Hindi. It still performs best in Romance and Germanic languages, but it can generate decent sentences in other languages as well.

Source: OpenAI

This preliminary testing of language capabilities is promising, but it is far from a full demonstration of multilingual ability. The test questions were originally written in English and then translated, and multiple-choice questions do not accurately represent ordinary speech.

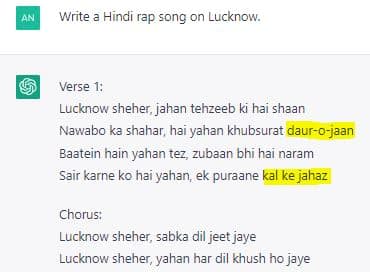

First, we asked GPT-4 to write an alliterative poem about India. Although judging literary quality is subjective, it successfully sustains alliteration throughout.

It even wrote a Hindi rap song about Lucknow, a city in North India. However, the song also had some incoherent parts, such as the phrases daur-o-jaan (beloved) and kal ke jahaz (tomorrow’s ships).

Accommodating different perspectives through Steerability

GPT-4 incorporates steerability more natively than GPT-3.5. Instead of being stuck with the classic ChatGPT personality and its fixed verbosity, tone, and style, users will be able to customize the model to better suit their needs. This works only “within bounds,” the team emphasizes, while pointing out that it is the simplest way to get the model to do what you want.
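For developers, OpenAI exposes this steerability through the “system” message of its Chat Completions API, where you describe the style and task you want. The Python sketch below only builds the JSON request body and does not send it. The instruction text, the prompt, and the helper name `build_chat_request` are illustrative assumptions; the `model` and `messages` fields and the `system`/`user` roles follow the API’s request format.

```python
import json


def build_chat_request(style_instructions: str, user_prompt: str, model: str = "gpt-4") -> dict:
    """Build a Chat Completions request body that steers the model via a system message.

    Hypothetical helper: only the field names and role names mirror the real API.
    """
    return {
        "model": model,
        "messages": [
            # The system message sets persona, tone, and verbosity for the conversation.
            {"role": "system", "content": style_instructions},
            # The user message carries the actual question.
            {"role": "user", "content": user_prompt},
        ],
    }


request = build_chat_request(
    "You are a Socratic tutor. Never give the answer directly; reply with short guiding questions.",
    "How do I solve 3x + 5 = 14?",
)
print(json.dumps(request, indent=2))
```

To actually run it, the body would be POSTed to `https://api.openai.com/v1/chat/completions` with an API key. Swapping only the system message is enough to give the assistant a different persona, which is what makes this approach more native than priming the default personality with suggestions.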

Steerability is an intriguing concept in artificial intelligence, referring to a model’s ability to change its behavior on demand. This can be beneficial, for example when the model acts as a sympathetic listener, or dangerous, for example when people convince it that it is evil or depressed.

With GPT-3.5, this could be done by priming the chatbot with messages such as “Pretend you’re a DM in a tabletop RPG” or “Answer as if you’re being interviewed for cable news.” However, such prompts were merely suggestions layered on top of the “default” GPT-3.5 personality. Skilled users have exploited this to “jailbreak” the previous version, successfully manipulating ChatGPT into dangerous behavior such as revealing bomb-making techniques.

In one case, a user spent several days tricking an earlier version of ChatGPT into believing that 2+2=5. At first, the chatbot gave the correct answer, but its response changed over time as the user prodded it into believing it had been wrong all along.

ive been teaching chatgpt that 2+2=5 every day pic.twitter.com/7PNOuBIUof

— (@tasty_gigabyte7) January 31, 2023

Ted Chiang, the American science fiction writer whose story was the basis for the 2016 Hollywood sci-fi film “Arrival,” wrote an essay for The New Yorker last month titled “ChatGPT Is a Blurry JPEG of the Web.” Chiang compared large language models such as ChatGPT to Xerox photocopiers. He argued that such models compress vast amounts of information in a lossy way, discarding much of the original detail.

As a result, when such a model produces specific information drawn from multiple, seemingly unrelated sources, it often generates only an approximation of that information. Just as a JPEG retains only an approximation of a higher-resolution image, tools like GPT retain only approximate versions of the details.
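Chiang’s point is about lossy compression. As a toy illustration (not how JPEG or GPT actually encode information), the Python sketch below applies uniform quantization, one of the simplest lossy schemes: each number is stored only as the index of a coarse bucket, so the reconstruction is close but not exact, and nearby values can collapse into the same result. The function names and the step size are hypothetical.

```python
def compress(values: list[float], step: float) -> list[int]:
    """Lossy step: keep only the index of each value's quantization bucket."""
    return [round(v / step) for v in values]


def decompress(codes: list[int], step: float) -> list[float]:
    """Rebuild approximate values from the bucket indices."""
    return [c * step for c in codes]


original = [3.14159, 2.71828, 1.41421, 1.73205]
codes = compress(original, step=0.5)       # [6, 5, 3, 3]
restored = decompress(codes, step=0.5)     # [3.0, 2.5, 1.5, 1.5]
print(restored)
```

Note how 1.41421 and 1.73205 both come back as 1.5: the distinction between them is gone for good, a small-scale version of the blurring Chiang describes.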

So far, it seems that Chiang’s conclusion cannot entirely be dismissed.

AI-focused tokens on a rally

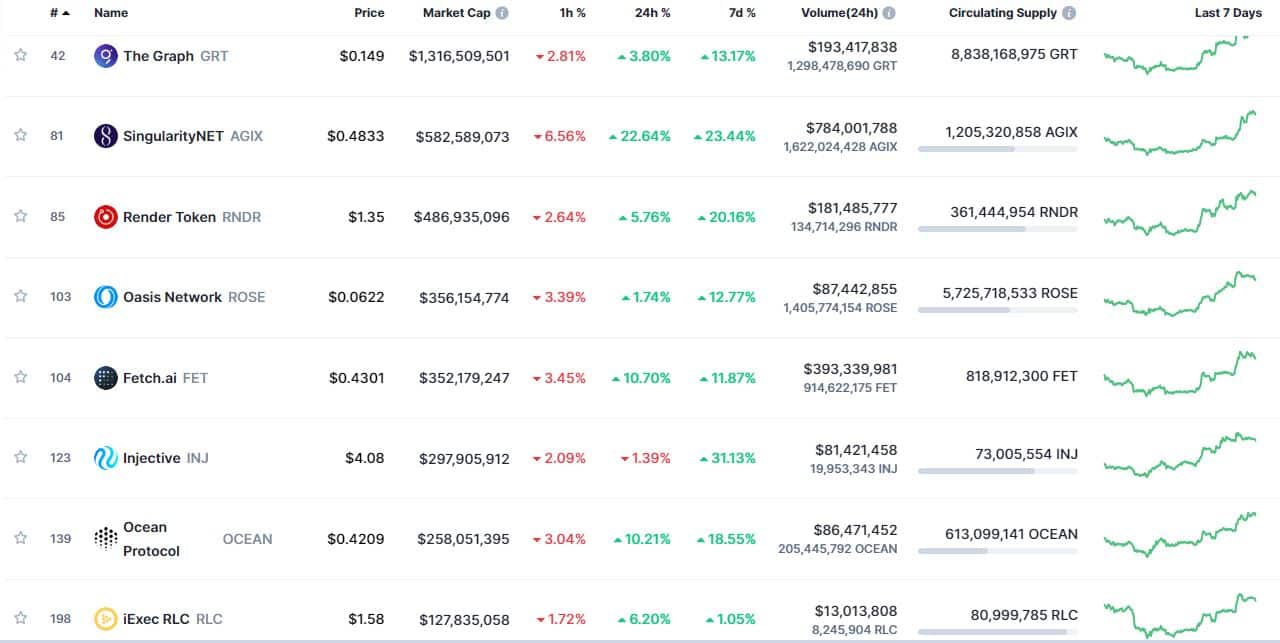

Several AI-focused blockchain projects have seen their tokens skyrocket in value after the news. These tokens have existed for years in the market, but the success of ChatGPT has given them a second life.

The combined market cap of these projects has grown by 7.09% in the last 24 hours alone, to more than $5.4 billion.

Source: CoinMarketCap

Among the most successful tokens are The Graph (GRT), SingularityNET (AGIX), Render Token (RNDR), Fetch.ai (FET), and Oasis Network (ROSE). Nearly all of these tokens have posted double-digit price gains over the last 48 hours.