For nearly 20 years, CPUs evolved at an incredible rate. Following the popular reading of Moore’s law, CPU performance doubled roughly every eighteen months until the mid-2000s, when manufacturers began exploring multi-core designs. Processors eventually became quad-core, then hexa-core, then octa-core and beyond, as tech companies searched for ways to design the fastest and most efficient machines.

Now, adding cores to a CPU does not necessarily make a single program run faster; rather, it allows multiple programs to run at once. When the limits of single-core processing were thought to have been reached, engineers devised clever ways to keep increasing the power and capabilities of computers.

The closest thing to a blockchain equivalent of a CPU would be a node. A node is any electronic device that is connected to a blockchain’s network and stores a copy of that blockchain. Nodes take care of three aspects of a blockchain. First, they are in charge of the computational component. This is the component which most people will understand as hashing the transactions and creating the blocks.

The second element is the storage of the results in the ledger, and the third is the consensus, that is, verifying that the data is correct. The first and third elements generally rely on the computational power of each node and the speed at which each transaction can be processed. Storage relies on a slightly different aspect of a node’s performance.
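To make the three roles concrete, here is a minimal Python sketch of my own. It is not how aelf or any particular blockchain implements a node; the function names, the transaction fields, and the in-memory `ledger` list are all assumptions chosen for illustration.

```python
import hashlib
import json


def hash_transaction(tx):
    """Return a SHA-256 hex digest of a transaction's canonical JSON form."""
    encoded = json.dumps(tx, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def build_block(prev_hash, transactions):
    """Computation: hash each transaction and seal the hashes into a block."""
    tx_hashes = [hash_transaction(tx) for tx in transactions]
    header = prev_hash + "".join(tx_hashes)
    return {
        "prev_hash": prev_hash,
        "tx_hashes": tx_hashes,
        "block_hash": hashlib.sha256(header.encode("utf-8")).hexdigest(),
    }


def verify_block(block, transactions):
    """Consensus-style check: recompute the block and compare it to the claim."""
    return build_block(block["prev_hash"], transactions) == block


# Storage: a plain list standing in for the ledger (hypothetical).
ledger = []

txs = [{"from": "alice", "to": "bob", "amount": 5}]
block = build_block("0" * 64, txs)
if verify_block(block, txs):
    ledger.append(block)
```

The hashing and verification steps are pure computation, while the final `append` is the only storage step, which is why the two can hit different bottlenecks.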

In the current setup, each node is made up of one computer, essentially a single-core CPU. The problem with this is that in order to improve the performance of the network, you must improve the performance of every single node. Improvement is definitely needed: we continue to see blockchains become congested, slowing down or becoming too expensive to use.

This directly relates to the performance of the nodes. Engineers have had to be more inventive with their solutions, and it shouldn’t be a surprise that blockchain developers have tried a wide variety of ways to improve this technology.

Common solutions have been increasing the block size (which raises the rate at which information can be processed, but also the rate at which the blockchain grows), writing simpler smart contracts, or improving the consensus mechanism to make the network less reliant on all nodes (which often comes with the side effect of losing some decentralization).

But none of these solutions addresses the issue at the heart of the blockchain scalability problem: as a blockchain becomes more popular and successful, a large backlog of transactions will inevitably build up waiting to be verified with each successive block, and the blockchain will eventually slow down. This is made worse when smart contract platforms, like Ethereum, run non-competing smart contracts sequentially, taking up time and processing power.
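The backlog effect is easy to see with a little arithmetic. The sketch below is a toy model with made-up figures (the arrival and capacity numbers are hypothetical, not measurements from any real chain): whenever more transactions arrive per block than a block can hold, the queue grows without bound.

```python
def backlog_after(blocks, arrivals_per_block, capacity_per_block):
    """Pending transactions after a number of blocks, starting from an empty queue."""
    pending = 0
    for _ in range(blocks):
        pending += arrivals_per_block
        pending -= min(pending, capacity_per_block)
    return pending


# Hypothetical load: 1,200 transactions arrive per block, but only 1,000 fit.
print(backlog_after(10, 1200, 1000))  # 2000 transactions left waiting

# With demand below capacity, the queue stays empty.
print(backlog_after(10, 800, 1000))  # 0
```

In this model the shortfall of 200 transactions per block never gets paid back, so the only lasting fix is raising capacity above demand.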

One could theoretically add more performance to the one computer, but the cost quickly grows out of proportion to the benefit. Even setting cost aside, one would eventually reach the physical limit of the available technology.

But even before we reach this point, two more limiting factors come into play. First, when transactions run one at a time, each transaction takes a certain minimum time to process, and this minimum cannot be reduced further. Second, there is the physical write speed limit of the data storage: you cannot write data faster than the hard drive on which it is stored allows.
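These two limits can be written down as a simple bound. The sketch below is my own simplification with hypothetical figures; it assumes every transaction takes the same time to execute and the same number of bytes to store, which real workloads will not.

```python
def max_sequential_tps(exec_seconds_per_tx, tx_bytes, disk_bytes_per_second):
    """Upper bound on transactions per second for one machine working sequentially."""
    compute_limit = 1.0 / exec_seconds_per_tx        # one transaction at a time
    storage_limit = disk_bytes_per_second / tx_bytes  # disk write ceiling
    return min(compute_limit, storage_limit)


# Hypothetical machine: 0.5 ms per transaction, 250-byte transactions, 100 MB/s disk.
# The compute limit (about 2,000 TPS) binds long before the disk does.
compute_bound = max_sequential_tps(0.0005, 250, 100_000_000)

# Same machine with a much slower 250 KB/s store: now the disk is the ceiling.
storage_bound = max_sequential_tps(0.0005, 250, 250_000)
```

Whichever limit is lower sets the ceiling, so speeding up only one of the two eventually stops helping.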

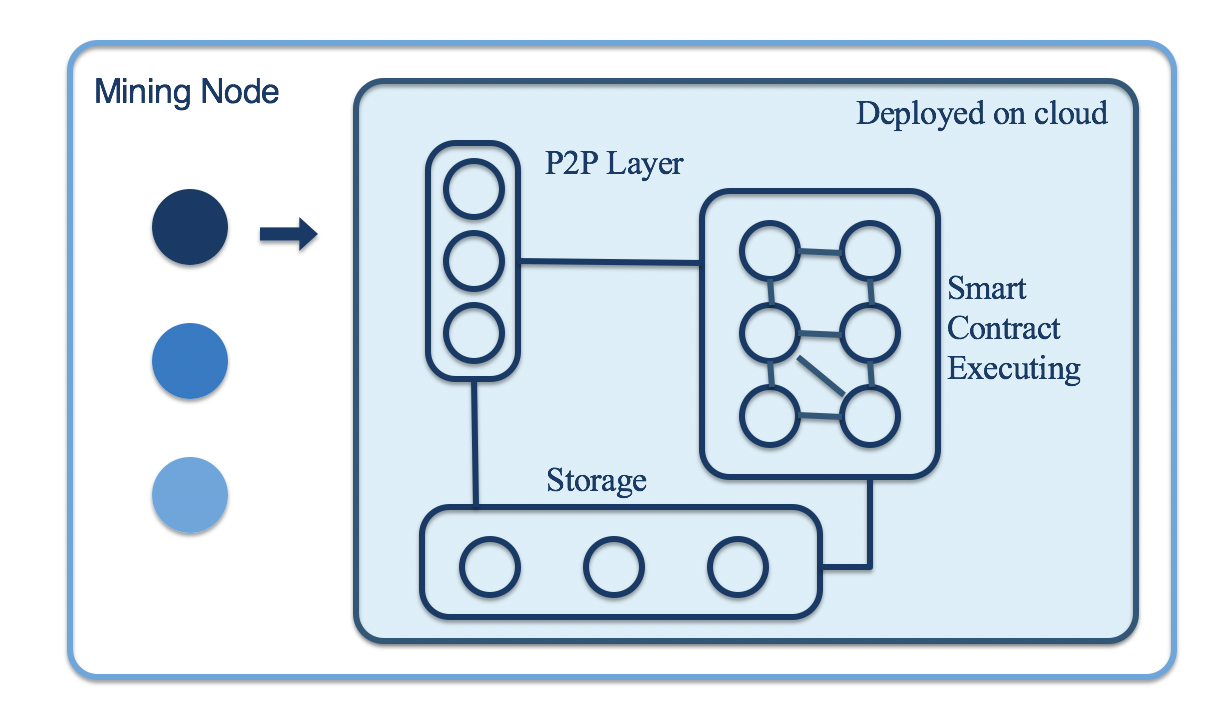

An approach which has eluded developers until now is adding more than one computer to an individual node. Taking its cue from CPUs, which now run multiple cores simultaneously, aelf has tackled this approach head-on. The difficulty lies in just two words: transaction dependency. I go into this in more depth in my article on parallel processing. In essence, once transaction dependency has been resolved, one can start adding multiple computers to a single node.

By creating nodes that are made up of multiple computers which can run in parallel, aelf is able to process non-competing transactions at the same time. Just like multiple cores in a processor allow a computer to run multiple programs at once, multiple computers in a node allow a blockchain to verify multiple transactions at once.
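As a rough illustration of the idea, the Python sketch below groups transactions that touch a shared account and runs the independent groups side by side. This is my own simplified example, not aelf’s actual algorithm: the `accounts` and `amount` fields are hypothetical, and a thread pool stands in for the separate computers inside a node.

```python
from concurrent.futures import ThreadPoolExecutor


def group_by_dependency(transactions):
    """Group transactions so that any two sharing an account land in the same group."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # Link every account touched by the same transaction.
    for tx in transactions:
        first = tx["accounts"][0]
        for other in tx["accounts"][1:]:
            parent[find(first)] = find(other)

    groups = {}
    for tx in transactions:
        groups.setdefault(find(tx["accounts"][0]), []).append(tx)
    return list(groups.values())


def apply_group(group, balances):
    """Apply one group's transfers in their original order."""
    for tx in group:
        src, dst = tx["accounts"]
        balances[src] -= tx["amount"]
        balances[dst] += tx["amount"]


def execute_in_parallel(transactions, balances):
    """Run independent groups concurrently; return how many groups there were."""
    groups = group_by_dependency(transactions)
    with ThreadPoolExecutor() as pool:
        # Groups touch disjoint accounts, so they cannot interfere with each other.
        list(pool.map(lambda g: apply_group(g, balances), groups))
    return len(groups)


balances = {name: 10 for name in ["alice", "bob", "carol", "dave", "erin"]}
txs = [
    {"accounts": ["alice", "bob"], "amount": 5},
    {"accounts": ["carol", "dave"], "amount": 3},
    {"accounts": ["bob", "erin"], "amount": 2},  # depends on the first (shares bob)
]
group_count = execute_in_parallel(txs, balances)
print(group_count)  # 2
```

The first and third transfers both involve bob, so they stay in one group and run in order, while the carol-to-dave transfer is free to run at the same time.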

This also means the nodes are scalable, the natural solution to blockchain’s previous lack of node scalability. Computers can be added to or removed from nodes, meaning that if the transactions grow more complex, or there are other changes in the blockchain, the nodes can adapt to meet the new demands. This flexibility is crucial for any project which plans to last well into the future.

This has fixed the computational component of blockchain speeds, but we still have the problem of data storage speeds. Here, too, aelf has come up with an innovative approach: splitting the data storage process from the computational processing. In simple terms, an aelf node is split into two clusters. One cluster of computers focuses on the computational processes, while the second cluster focuses on data storage.

This has now removed the physical limiting factors for both layers. By default, the blockchain ledger will now be stored on a cluster of computers rather than on every single computer. Technically speaking, a complete ledger will still exist on every node.
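A minimal sketch of this split might look like the following. The class names, the CRC-based placement rule, and the record format are my own assumptions for illustration and should not be read as aelf’s design; the point is only that the compute side never stores anything itself, and that the storage side spreads one complete ledger over several machines.

```python
import zlib


class StorageCluster:
    """Hypothetical storage layer: spreads ledger entries across several machines."""

    def __init__(self, machine_count):
        # Each dict stands in for one storage machine.
        self.machines = [dict() for _ in range(machine_count)]

    def _machine_for(self, key):
        # Deterministic placement: the same key always maps to the same machine.
        index = zlib.crc32(key.encode("utf-8")) % len(self.machines)
        return self.machines[index]

    def write(self, key, value):
        self._machine_for(key)[key] = value

    def read(self, key):
        return self._machine_for(key)[key]

    def entry_count(self):
        return sum(len(machine) for machine in self.machines)


class ComputeCluster:
    """Hypothetical compute layer: processes transactions, then hands off storage."""

    def __init__(self, storage):
        self.storage = storage

    def process(self, tx_id, payload):
        record = {"tx_id": tx_id, "payload": payload, "status": "verified"}
        self.storage.write(tx_id, record)
        return record


storage = StorageCluster(machine_count=3)
node = ComputeCluster(storage)
for i in range(10):
    node.process(f"tx-{i}", {"amount": i})
```

No single storage machine has to hold every entry, yet the node as a whole can still read back any transaction, and adding a machine to either cluster does not require touching the other.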

With this approach, aelf simply needs to add another computer to a node in order to improve the scalability of the blockchain. Many projects describe their blockchain as scalable, but none have resolved these core issues in a way that future-proofs it against bottlenecks. aelf is implementing a solution that is viable for the current and future needs of blockchain adoption.

They have also designed the ecosystem so that it can evolve according to future needs. This allows elements like the consensus protocol to adapt, should a newer protocol be developed with higher security, or should a side chain’s requirements change.

aelf has just announced that, using this approach, they were able to create a stable testnet V1.0 with a TPS of 15,000. With this as the base for such a scalable blockchain, it is no wonder that heavy hitters in the industry have backed aelf and joined as partners, including Huobi, Michael Arrington, and FBG Capital, to name a few. aelf is surely one of the blockchains to watch over the next 12 months as they continue to announce new partnerships every few weeks and launch their mainnet in Q1 2019.

About the author:

Mappo has been investing and trading in fiat currencies since 2013. He has recently moved into the crypto world spreading his portfolio over long-term coin investments, ICOs and day trading.
